Before we start spending tons of cash on more internet bandwidth and upgraded network equipment such as servers, switches and access points to resolve network slowness, we should investigate our network further with packet sniffer tools such as Wireshark or tcpdump.

Packet Loss

In one of our previous posts, we pointed out common causes of slow networks, including network congestion and link-level errors. Congestion typically occurs where traffic moves from a faster link to a slower one, for example from a 10 Gbps link to a 1 Gbps link; a common link-level error is the FCS (frame check sequence) error. Packet sniffers such as tcpdump and Wireshark can help us identify various TCP issues, including missing acknowledgements, retransmissions, out-of-order segments and dropped packets.
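Wireshark flags these conditions itself (for example with the display filters `tcp.analysis.retransmission`, `tcp.analysis.out_of_order` and `tcp.analysis.lost_segment`). To show the basic idea behind those flags, here is a minimal sketch that classifies the data segments of one TCP direction by sequence number alone. It is a simplified heuristic, not Wireshark's actual algorithm: it assumes the segments have already been extracted as hypothetical `(seq, payload_len)` pairs in capture order, and it ignores timing and sequence-number wraparound.

```python
from typing import List, Tuple


def classify_segments(segments: List[Tuple[int, int]]) -> List[str]:
    """Label (seq, payload_len) data segments of one TCP direction, in capture order.

    Simplified heuristic (not Wireshark's real logic):
      "ok"             seq is exactly the next expected sequence number
      "gap"            seq jumps ahead, so earlier data was lost or not captured
      "retransmission" this seq was already seen once
      "out-of-order"   seq is behind but was never seen (it fills an earlier gap)
    """
    labels = []
    seen = set()
    next_expected = None  # highest seq + len observed so far
    for seq, length in segments:
        if next_expected is None or seq == next_expected:
            label = "ok"
        elif seq > next_expected:
            label = "gap"
        elif seq in seen:
            label = "retransmission"
        else:
            label = "out-of-order"
        seen.add(seq)
        end = seq + length
        if next_expected is None or end > next_expected:
            next_expected = end
        labels.append(label)
    return labels


print(classify_segments([(1, 100), (101, 100), (301, 100), (201, 100), (201, 100), (401, 100)]))
# ['ok', 'ok', 'gap', 'out-of-order', 'retransmission', 'ok']
```

Without timing information this heuristic cannot tell a late-arriving segment from the retransmission of a segment that was lost before it reached the capture point; both fill the gap and are labelled "out-of-order". Real analyzers also look at how long after the gap the segment arrives.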

Network congestion likewise needs to be identified, and traffic prioritized, to resolve slowness. Beyond packet loss, we can use Wireshark to troubleshoot the following causes of slowness on our network:

Applications That Are Slow to Respond:

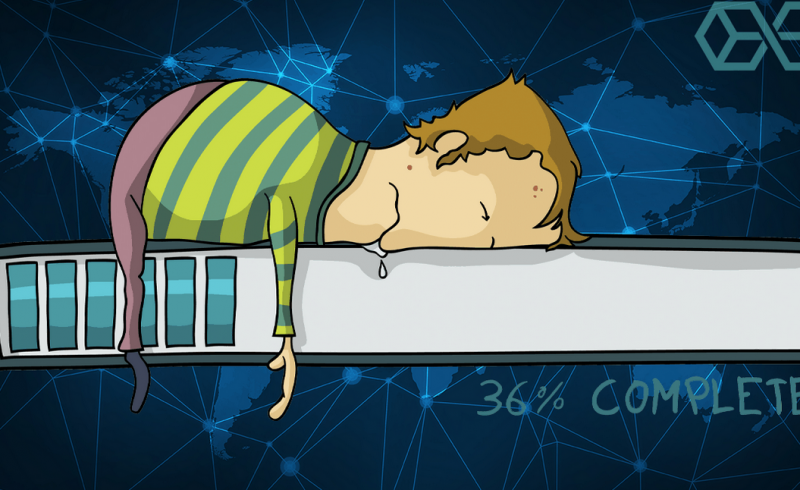

We can measure application response time by looking at the delta times (see illustration above) between the application requests the client sends to the server and the responses the server sends back. This information is available in trace files captured by Wireshark. These traces point us in the right direction; for example, they can show that the delay occurred on the server side, between receiving the client's request and sending its response.
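The delta-time calculation can be sketched in a few lines. This is a minimal sketch, assuming the trace has already been reduced to a list of hypothetical `Packet` records (capture time, sender, and whether the packet carries application data); the field names and the threshold are illustrative, not part of Wireshark.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Packet:
    time: float        # seconds since the start of the capture
    sender: str        # "client" or "server" (hypothetical labels)
    is_app_data: bool  # True for an application request/response, False for a bare ACK


def response_times(packets: List[Packet]) -> List[Tuple[float, float]]:
    """Pair each client request with the first server data packet that follows it.

    Returns (request_time, delta) tuples; delta is the application response
    time in seconds as seen at the capture point. Extra packets belonging to a
    multi-packet request or response are ignored.
    """
    results = []
    pending: Optional[float] = None  # time of the outstanding client request
    for p in packets:
        if not p.is_app_data:
            continue  # bare ACKs say nothing about application response time
        if p.sender == "client" and pending is None:
            pending = p.time
        elif p.sender == "server" and pending is not None:
            results.append((pending, p.time - pending))
            pending = None
    return results


def slow_responses(packets: List[Packet], threshold: float) -> List[Tuple[float, float]]:
    """Keep only requests whose response took longer than `threshold` seconds."""
    return [r for r in response_times(packets) if r[1] > threshold]


trace = [
    Packet(0.0, "client", True),        # request 1
    Packet(0.03125, "server", False),   # server ACKs the request quickly
    Packet(1.5, "server", True),        # response 1 arrives much later
    Packet(2.0, "client", True),        # request 2
    Packet(2.25, "server", True),       # response 2
]
print(response_times(trace))       # [(0.0, 1.5), (2.0, 0.25)]
print(slow_responses(trace, 1.0))  # [(0.0, 1.5)]
```

In this made-up trace the server acknowledges the first request within about 31 ms but takes 1.5 s to answer it, which points at the server rather than the network.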

Applications That Are Written Poorly:

Most applications are written in a lab environment with hardly any network latency. Once the same applications are used in “real world” situations, for example moved to cloud hosting or to a distant data center, every exchange between client and server has to cross a much longer path, and the application slows down considerably.
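A quick calculation shows why a "chatty" application that feels instant in the lab can crawl over a WAN. This sketch assumes the application makes its exchanges one after another, each waiting a full round trip; the 200 exchanges and the 0.5 ms and 40 ms round-trip times are hypothetical figures chosen for illustration.

```python
def transaction_time_ms(round_trips: int, rtt_ms: float, server_ms_per_turn: float = 0.0) -> float:
    """Rough lower bound for an application that makes `round_trips` sequential
    request/response exchanges: each exchange waits one full network round trip
    plus the server's processing time. Bandwidth is ignored."""
    return round_trips * (rtt_ms + server_ms_per_turn)


# Hypothetical figures: 200 sequential exchanges per user action.
lab = transaction_time_ms(200, rtt_ms=0.5)   # 100.0 ms on a lab LAN
wan = transaction_time_ms(200, rtt_ms=40.0)  # 8000.0 ms to a distant data center
print(lab, wan)
```

The code did not change; only the round-trip time did, and because every exchange pays it, a 0.1 s action becomes an 8 s one. Adding bandwidth does not help here; reducing the number of sequential exchanges does.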

Too many redirects can also cause significant delays. When a client first connects to a server, it may be redirected to yet another server to reach the data it requires, and the new server may require the client to connect on a different port number. Each redirect adds round trips, and together they contribute to the overall slowness of application delivery. The application developer can resolve these issues by reworking the application to avoid unnecessary redirects.
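The cost of a redirect chain can be estimated by counting round trips. This is a minimal sketch under a deliberately simple model: each hop is one request/response (one round trip), and reaching a host and port with no open connection first costs a TCP three-way handshake (one more round trip). DNS lookups, TLS handshakes and server processing are ignored, and the host names and ports are hypothetical.

```python
from typing import List, Tuple


def redirect_cost(hops: List[Tuple[str, int]], rtt_ms: float) -> Tuple[int, float]:
    """Estimate (round_trips, milliseconds) to follow a chain of (host, port) hops.

    Simplified model: a new host:port needs a TCP handshake (1 RTT) before its
    request/response (1 RTT); a host:port already connected needs only the
    request/response.
    """
    open_conns = set()
    round_trips = 0
    for host_port in hops:
        if host_port not in open_conns:
            round_trips += 1  # TCP three-way handshake to a new host or port
            open_conns.add(host_port)
        round_trips += 1      # the request/response itself
    return round_trips, round_trips * rtt_ms


# Hypothetical chain: entry server -> login server -> data server on another port.
chain = [("app.example.com", 80), ("login.example.com", 8080), ("data.example.com", 8443)]
print(redirect_cost(chain, rtt_ms=40.0))                         # (6, 240.0)
print(redirect_cost([("data.example.com", 8443)], rtt_ms=40.0))  # (2, 80.0)
```

Under these assumptions, two redirects triple the time needed just to reach the data, before any TLS or DNS overhead is added.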

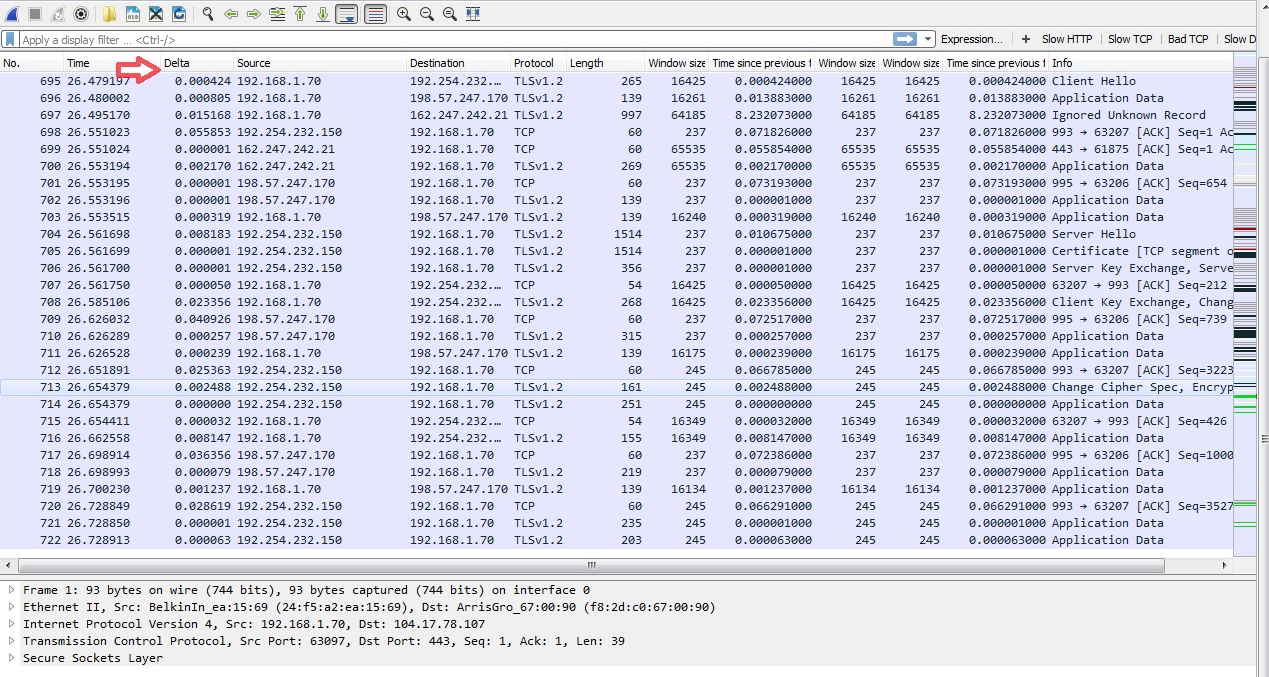

Layer 4 (TCP) Protocol Issues:

In any business, large file transfers or downloads can run into delays. This often causes confusion over ownership: the network engineer says the problem is the server engineer's responsibility, while the server engineer thinks the network engineer needs to take care of it.

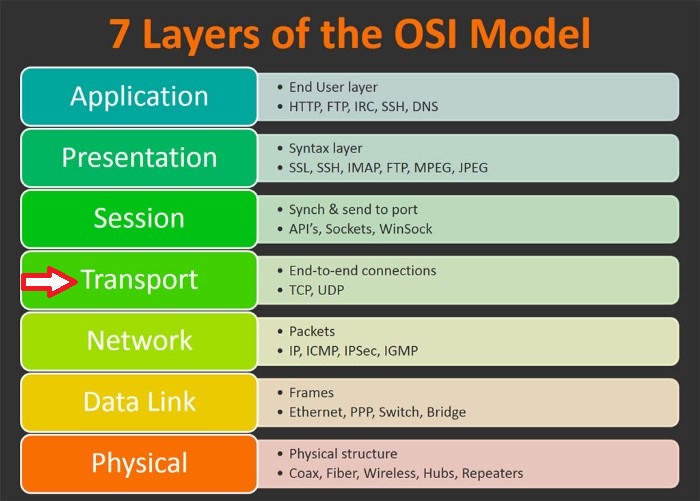

TCP operates at Layer 4 (Transport) of the OSI model, on both the server and the client. To move data from one endpoint to another, the Transmission Control Protocol (TCP) ensures that everything sent is acknowledged and retransmits missing data when necessary. In a perfect network there would be no network limits, no receive limits and no send limits, and hence no dropped packets. In reality it does not work this way: the server's TCP send window varies and may be larger or smaller than the client's TCP receive window, and a transfer can only go as fast as the smaller of the two allows.
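The effect of window size on a large transfer can be worked out with two standard formulas: a single connection can have at most one window of unacknowledged data in flight per round trip, so throughput is bounded by window / RTT, and the window needed to fill a link is its bandwidth-delay product. The sketch below treats the sender's limit as one number, which is a simplification (in practice it is the smaller of the congestion window and the send buffer); the window sizes, RTT and link speed in the example are hypothetical.

```python
def max_throughput_mbps(send_window_bytes: int, recv_window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one TCP connection's throughput, in megabits per second.

    At most one window of unacknowledged data can be in flight per round trip,
    and the usable window is the smaller of the sender's and receiver's limits.
    Loss, congestion-control dynamics and header overhead are ignored.
    """
    window = min(send_window_bytes, recv_window_bytes)
    bits_per_second = window * 8 / (rtt_ms / 1000)
    return bits_per_second / 1_000_000


def window_needed_bytes(link_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the link busy."""
    return link_mbps * 1_000_000 / 8 * (rtt_ms / 1000)


# Hypothetical example: the server is willing to send 1 MiB, the client advertises 65,535 bytes.
print(round(max_throughput_mbps(1_048_576, 65_535, rtt_ms=40), 1))  # 13.1
print(round(window_needed_bytes(1000, rtt_ms=40)))                  # 5000000
```

With a 40 ms round trip, a 65,535-byte receive window (the largest TCP can advertise without the window scale option of RFC 7323) caps the transfer at about 13.1 Mbps, even though filling a 1 Gbps link would need roughly 5 MB in flight. A capture that shows the client's advertised window stuck at a small value therefore points at the end hosts, not at the link.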

In summary, we have several options at our disposal to take a “deeper dive” into our network and resolve the bottlenecks causing slowness. Doing so saves not only a lot of time but money as well!